Updated April 1st, 2024 — version 338

The UnFair Advantage Book

Winning the Search Engine Wars

Chapter Seven

On-page, Internal Ranking Signals

Back in Chapter Four you learned about some of the ranking signals. You may remember that we used hypothetical dials to illustrate their relative importance.

In some cases the dial-maximums were set high, in other cases the dial-maximums were set low. We even showed you dials that could register a negative ranking score. In this chapter you'll learn about all of the important internal (i.e., on-page) ranking signals and their relative importance on the current algorithm dial.

Let's start by defining internal ranking signals. These are variable page elements found within your site's webpages. You have total control over all of these elements since they exist completely within the realm of your website. The internal ranking signals covered in this chapter should be regarded as essential elements of your site's optimized web presence. Any internal factor NOT covered here should be considered of little significance.

The Title Tag

The title tag has always been, and still is, the #1 internal ranking signal. Within the source code of your webpage, the title tag looks like this:

<title>Your title tag keywords go here</title>

The title tag is intended to tell the search engine what the page is about. That's why you should put your most important keywords in the title tag. If your page topic is about steel rebar, then the keywords steel rebar should be included in the title tag.

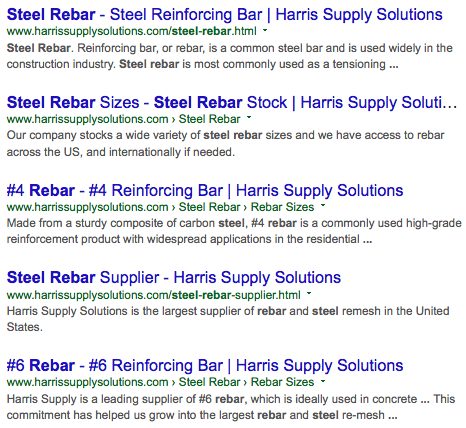

Below is an example of the title tags used by a real-world company that managed to rank 5 of their webpages in the Top 10 search results for the keyword steel rebar:

<title> Steel Rebar - Steel Reinforcing Bar | Harris Supply Solutions </title>

<title> Steel Rebar Sizes - Steel Rebar Stock | Harris Supply Solutions </title>

<title> #4 Rebar - #4 Reinforcing Bar | Harris Supply Solutions </title>

<title> Steel Rebar Supplier - Steel Reinforcement Supplier | Harris Supply Solutions </title>

<title> #6 Rebar - #6 Reinforcing Bar | Harris Supply Solutions </title>

Notice that NONE of the title tags are identical. This is important. You should never have any duplicate title tags anywhere on your website! Duplicate title tags are confusing to search engines and viewed as an error which can negatively affect your search rankings.

Generally speaking you should limit your title tags to 65 characters. This is intended as a guideline. You might notice that the fourth title tag listed above exceeds the 65 character limit. But it should also be noted that the most important keywords are arranged toward the beginning of each title tag just as they should be.
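As a rough illustration of the two checks described above (unique titles and the 65-character guideline), here is a minimal Python sketch. The function name `audit_titles` and the case-insensitive duplicate comparison are our own assumptions for the example; the titles are the five listed above.

```python
from collections import Counter

TITLE_LIMIT = 65  # guideline from the text, not a hard rule


def audit_titles(titles, limit=TITLE_LIMIT):
    """Return (too_long, duplicates) for a list of title strings."""
    cleaned = [t.strip() for t in titles]
    too_long = [t for t in cleaned if len(t) > limit]
    # Assumption: compare case-insensitively so "Rebar" and "rebar" count as duplicates.
    counts = Counter(t.lower() for t in cleaned)
    duplicates = sorted(t for t, n in counts.items() if n > 1)
    return too_long, duplicates


titles = [
    "Steel Rebar - Steel Reinforcing Bar | Harris Supply Solutions",
    "Steel Rebar Sizes - Steel Rebar Stock | Harris Supply Solutions",
    "#4 Rebar - #4 Reinforcing Bar | Harris Supply Solutions",
    "Steel Rebar Supplier - Steel Reinforcement Supplier | Harris Supply Solutions",
    "#6 Rebar - #6 Reinforcing Bar | Harris Supply Solutions",
]

too_long, duplicates = audit_titles(titles)
print("Over the guideline:", too_long)
print("Duplicates:", duplicates)
```

Run against the five titles, only the fourth is flagged as over the guideline and no duplicates are reported.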

Always remember the content within your title tag is frequently the text that appears as a link in the search results.

The following screenshot illustrates how Google pulls content from the title tag to provide your descriptive link.

If you compare these links to the title tags listed above, you'll see how Google adapts them in whatever manner they deem appropriate. In many cases they'll simply shorten them while in other cases they'll delete a portion. Sometimes they'll pull content from within your page that matches the search query and add it to the descriptive link. In this screenshot you may notice that Google truncated the link description in the second result because the title tag exceeded 65 characters.

Also good to know is that sometimes Google won't use any of your title tag. Instead they'll insert their own version of your link description to better match the content of the search query. However, they always use your actual title tag for ranking purposes even if they rewrite it in the link description.

This means that sometimes Google might add your company name or the keywords used in the search query or maybe pull content from a headline tag or an inbound link's anchor text if the page's title tag is lacking content that's relevant to the search query.

They are not messing with your source code and whatever they insert does not factor into the ranking algorithm. It's simply their way of making your descriptive link on the search engine results page (SERP) a closer match to whatever keywords the searcher is using.

So don't get upset if Google uses its own title to describe your link. Nothing is wrong. Google routinely makes these kinds of adjustments to "provide a good user experience" for its site visitors. Fortunately, in most cases this works to your benefit because people are more likely to click links that match their search queries.

The Meta Description Tag

While not nearly as important to ranking as the Title tag, the Meta Description tag should never be overlooked. It's frequently the source from which Google pulls the text that's displayed directly below your descriptive link. Think of it as the enticement for clicking if the content of your descriptive link isn't already compelling enough.

Within the source code, it looks like this:

<meta name="description" content="A good meta description tag entices the searcher to click the link by describing what they'll find when they view the page."/>

Therefore, the Meta Description tag is important because it can affect click-through rates once your pages are actually found in the search engines. Remember, it doesn't do any good to rank well if your links don't get clicked.

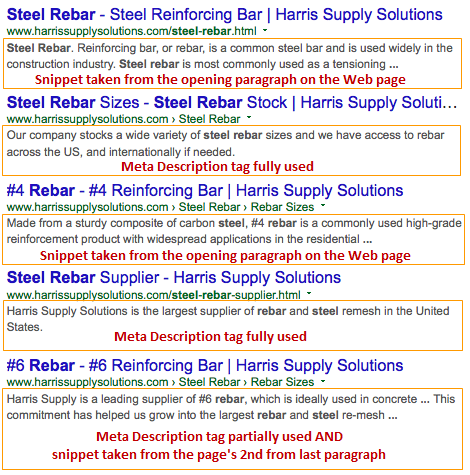

Below we see the Meta Description tag was used fully or partially in three out of five page descriptions.

Take note that Google also pulled snippets of text from the body content. On two of the pages they pulled the snippets from text located at the beginning. Then, on one of the pages, they pulled the snippet from text located near the end of the page.

This tells us that a webpage's opening and closing text content is important with regard to SERP (search engine results page) descriptions and can be used to entice clicks.

Be aware that...

your keywords in the meta description tag are NOT a ranking signal. However, they ARE important for getting click-throughs.

You should also know that, if the keyword used in the search query is missing from the meta tag, Google will often grab a snippet of text from the webpage to better match the search query.

Keywords in the Domain Name as a Ranking Signal

The importance dial has been turned to almost zero for generic keywords in the domain name. In other words, something like buycheapairlinetickets.com not only shouldn't be expected to provide any ranking advantage, it would actually look spammy to Google.

Instead, Google looks at the content quality of the webpage and the quality of the incoming links when evaluating the relevance of a webpage.

The exception is domain name keywords that match a unique brand name. For example, when searching for pepsi, having Pepsi.com as the domain name definitely helps their website rank at the top of the organic search results.

So, one of the best online marketing strategies is to figure out ways to get people to use your brand name to search for what you're selling.

Headline Tags

It's important to place your best keywords in your <H1> (headline) tag because Google looks for keywords in the <H1> tag and oftentimes pulls your snippet from this area of your page.

We recommend using the <H1> tag only once per page and placing it somewhere near the beginning of the body text.

Conversely, you should avoid placing the <H1> in the left or right rail content where we often see the navigation portion of the page.

Ideally your keywords should appear early in the headline but keep in mind the headline must read well to site visitors or else it will hurt sales. So the rule is...

create your headline to attract attention to your product first — and to please Google's algorithm second.

By the way, as you probably know, an <H1> headline can appear on a page as disturbingly HUGE. In most cases, the font size is too large in terms of creating an aesthetically pleasing webpage design. The work-around involves CSS (cascading style sheets). By using CSS to adjust the font size of the headline to align with the design goals of the page, you can address both design and SEO concerns at the same time. And, in case you are wondering, using CSS to reduce your <H1> font size is perfectly ok with Google.

While the <H1> tag can help your ranking, <H2> and <H3> tags are less effective. Regardless, it's OK to use them: they might help a little and they certainly won't hurt. But you shouldn't expect a boost of any significance from keywords in headline tags other than the <H1>.
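To check the "one <H1> per page" recommendation on your own pages, a small script along these lines may help. It is only a sketch built on Python's standard `html.parser` module; the class name `HeadlineCounter`, the helper `h1_headlines`, and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class HeadlineCounter(HTMLParser):
    """Collect the text of every <h1> element in an HTML document."""

    def __init__(self):
        super().__init__()
        self.h1_texts = []
        self._in_h1 = False

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names, so <H1> and <h1> both match.
        if tag == "h1":
            self._in_h1 = True
            self.h1_texts.append("")

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        if self._in_h1:
            self.h1_texts[-1] += data


def h1_headlines(html):
    parser = HeadlineCounter()
    parser.feed(html)
    parser.close()
    return [text.strip() for text in parser.h1_texts]


# Hypothetical page, for illustration only.
page = """
<html><body>
  <h1>Steel Rebar Sizes</h1>
  <p>Intro copy.</p>
  <h2>Common sizes</h2>
</body></html>
"""
headlines = h1_headlines(page)
print(len(headlines), headlines)
```

A count other than one is a prompt to review the page, not proof of a problem.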

On-page Anchor Text

The keyword text within your on-page links — the anchor text — provides a bit of help, ranking-wise. But, since Google knows this is easily manipulated, they don't turn the dial up very high on the algorithm. Regardless, it will usually help and not hurt your ranking efforts provided that you do not abuse the strategy. If Google thinks your on-page anchor text is there to manipulate rankings, they can penalize you. Therefore, we recommend that you limit your keywords within your anchor text to only a few per page. Any more than that could be counterproductive.

Keywords in Body Text

As you might imagine, having your keywords in your body text (the page content) is also important. This is what Google indexes and uses to determine if a page is relevant to the search query. In regards to on-page ranking signals, the keywords found within page content are typically a medium to strong ranking signal on the algorithm dial.

However, it's a bad idea to stuff or repeat an excessive number of keywords. Doing so will get your webpage penalized. It's best to sprinkle in your keywords naturally in ways that sound comfortably conversational when you read it out loud. Otherwise your page's quality score will suffer and its ranking will be hurt.

It's best to place your keywords toward the beginning of the body copy. It can also be beneficial to place them toward the end of the text as well. If it seems natural to use them in other locations, then do so. Just be sure to avoid using them in the way that a used car salesman might overuse your name when trying to sell you something. If it sounds a little creepy when you read it out loud, then you've probably repeated your keywords too frequently.

Images

Images are an often overlooked ranking factor. While it's true that search engines can't "see" images, they can see the filenames and the Alt tag. Therefore you should name your image files by using applicable keywords like keyword.jpg.

Remember that some people use Image Search as their primary search vehicle. In such cases you'll want your images to rank well because top ranking images are another great way to drive traffic to your site. The Alt tag provides an opportunity to associate keywords with your images.

<img src='/images/Z4M40i.jpg' alt='BMW Z4 M40i'>

The image Alt tag (actually it's an attribute, although most people call it a tag) is also an accessibility issue. To make your image Alt tags screen-reader friendly for users who are visually impaired, it's recommended that you describe what a sighted person would see when viewing the image. As such, the image Alt tag in our example above could be expanded to:

<img src='/images/Z4M40i.jpg' alt='White BMW Z4 M40i Roadster Convertible with 3.0-liter TwinPower Turbo inline 6-cylinder, Rear-Wheel Drive and black leather interior'>

As you can see, keywords in Alt text can be optimized to provide better accessibility while at the same time also chosen to help your images rank better in image search.

Take care not to repeat keywords within the Alt tag and try to use keywords that are different from the file name and the image captions so that screen readers aren't repeating the same text over again — something that can be annoying to site visitors who are visually impaired.
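If you want to find images that have no Alt text at all, something like the following sketch could be a starting point. It uses Python's standard `html.parser`; the names `ImageAltAudit` and `images_missing_alt` and the `banner.jpg` and `spacer.gif` files are hypothetical. Keep in mind that an empty Alt attribute can be a deliberate choice for purely decorative images, so treat the output as a review list.

```python
from html.parser import HTMLParser


class ImageAltAudit(HTMLParser):
    """Record the src of every <img> whose alt attribute is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        # Attribute names arrive lowercased, so Alt= and alt= are treated alike.
        attributes = dict(attrs)
        alt = (attributes.get("alt") or "").strip()
        if not alt:
            self.missing_alt.append(attributes.get("src", "(no src)"))


def images_missing_alt(html):
    audit = ImageAltAudit()
    audit.feed(html)
    audit.close()
    return audit.missing_alt


# Hypothetical markup, for illustration only.
page = """
<img src='/images/Z4M40i.jpg' Alt='BMW Z4 M40i'>
<img src='/images/banner.jpg'>
<img src='/images/spacer.gif' alt=''>
"""
print(images_missing_alt(page))
```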

In addition, you can help the search engines index your images more completely by using an XML image site map. For in-depth information on this topic, take a look at these articles (requires SEN Membership):

Keyword Density

Keyword Density is a ratio that is calculated by dividing the number of times your target keyword appears on the page by the number of total words on the page. For instance, if your keyword appears 10 times in a page with a total of 500 words, the keyword density is 2% (10/500=0.02).
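The calculation above can be sketched in a few lines of Python. This is a simplified illustration that assumes a single-word keyword and a basic definition of a "word" (runs of letters, digits, and apostrophes); the function name `keyword_density` is our own.

```python
import re


def keyword_density(text, keyword):
    """Occurrences of a single-word keyword divided by the total word count."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    return words.count(keyword.lower()) / len(words)


# Build a 500-word page in which "rebar" appears 10 times.
page = " ".join(["rebar"] * 10 + ["filler"] * 490)
print(f"{keyword_density(page, 'rebar'):.1%}")  # 10 / 500
```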

Although a perfect keyword density ratio was, in the past, an important ranking factor, today that's not the case.

Our best advice regarding the ideal keyword density is to simply make it higher than any other word that appears on your page.

If you're selling rebar, then the keyword rebar should have the highest keyword density ratio. This ensures the search engines will accurately determine the topic of the webpage.
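One way to see which word currently dominates a page is to count word frequencies. The sketch below is an assumption-laden illustration: it ignores a tiny, made-up stop-word list (otherwise words like "the" would always win), and the names `STOP_WORDS` and `top_terms` and the sample copy are hypothetical.

```python
import re
from collections import Counter

# A tiny, illustrative stop-word list; a real one would be much longer.
STOP_WORDS = {"a", "an", "and", "are", "for", "in", "is", "of", "on",
              "our", "the", "to", "we", "with"}


def top_terms(text, n=3):
    """Return the n most frequent words, skipping common stop words."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    counts = Counter(w for w in words if w not in STOP_WORDS)
    return counts.most_common(n)


copy = (
    "We stock steel rebar in every common size. Our rebar is cut to length, "
    "and rebar orders ship the same day."
)
print(top_terms(copy))
```

If your target keyword is not at the top of the list, the page topic may not be as clear as you intended.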

Remember to keep it natural. Your page content should make sense to humans when they read it. Avoid hammering any keyword too much and don't stress over trying to get the perfect keyword density ratio because there is no such thing as a perfect ratio anymore.

URL Structure

Using a simple URL that includes your targeted keywords will typically provide a slight to medium boost in ranking. Looking at our rebar example, we see the keyword is used in all five of the Harris Supply Solutions URLs in the top 10 as such:

- http://www.harrissupplysolutions.com/steel-rebar.html

- http://www.harrissupplysolutions.com/steel-rebar-sizes-stock.html

- http://www.harrissupplysolutions.com/4-rebar.html

- http://www.harrissupplysolutions.com/steel-rebar-supplier.html

- http://www.harrissupplysolutions.com/6-rebar.html

Don't get carried away trying to stuff too many keywords into the URL. Remember that when the URL becomes too long, it's difficult to direct someone over the phone where to go. In addition, long URLs can be difficult to use in an email because the link tends to break at the hyphen if it uses more than one line. So, with these considerations in mind, it's a good idea to include your keywords in the URL provided that you take the conservative approach.
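As a sketch of the conservative approach, the following Python helper turns a page title into a short, hyphenated file name. The function name `slugify` and the five-word cap are assumptions chosen for the example, not a rule from any search engine.

```python
import re


def slugify(title, max_words=5):
    """Lowercase a title, keep only letters and digits, and join words with hyphens."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    # Cap the number of words so the URL stays short and easy to read aloud.
    return "-".join(words[:max_words])


print(slugify("Steel Rebar Sizes & Stock") + ".html")
print(slugify("#4 Rebar") + ".html")
```

With these inputs the helper produces file names of the same shape as the Harris Supply Solutions URLs listed above.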

Uniqueness of Content

Having unique content is critically important. That's because search engines tend to view duplicate content as a waste of their indexing resources and counter to what their searchers are looking for. They correctly reason that nobody wants to search for a red widget and find hundreds of red widget pages that are all alike. The search engines want to provide searchers with a variety of unique pages. This might include:

- red widget product,

- red widget specifications,

- red widget reviews,

- red widget discounts,

- red widget videos

...and so forth.

Of course if you're writing a blog and producing original content of your own, then uniqueness is easy. But if you're one of a thousand sites selling a name-brand product, then creating uniqueness is going to be more challenging.

In such cases, the key to overcoming the challenge is in the product descriptions. While it's true that many websites have hundreds of merchants listing the same products in their shopping carts, you will find that...

only pages with unique product descriptions will typically rank well

...while those that use the brand-name-suggested descriptions are either filtered out or buried in the rankings. And, by the way, this applies to images as well.

So, if you are selling something that a lot of others are also selling then you must rewrite the product descriptions and rename the product image files so that your product pages and images are not filtered out of the search results as duplicate content.

In cases where you aren't allowed to change the product description, you can add content to make the page unique. For instance, some sites add user reviews and product demonstration videos. By enriching the manufacturer's content you can make your page unique and more deserving of a good ranking.

True, this requires a bit more work. But if you don't do it, then you can't expect to rank well because you're probably competing with the likes of Amazon and perhaps also the name brand company that manufactures the product.

Mobile Compatibility

If there's one ranking dial that's turned all the way up, it's Mobile Compatibility.

Mobile "friendliness" is the most important 'on-page' dial of them all. It's critically important that your webpages display properly when viewed on ALL devices but especially on mobile devices.

Google has committed to providing searchers a quality experience when using their smartphones and tablets. This means your site design must be responsive to all devices if you want your webpages to rank well.

In addition, and as we explained in Chapter Four when we talked about the Page Experience algorithm, your webpages must load fast as measured by the PageSpeed Insights tools. Loading speed is a major component of your site's Core Web Vitals and is considered an important element of mobile compatibility.

It's always a good idea to check your site's functionality on as many devices as possible. That includes Android and iPhone as well as tablets of all sizes.

Using the Chrome browser you can do a quick check by right-clicking on a webpage and selecting Activate the mobile view on this page, as seen in the left panel of the image below...

Once activated, as seen in the right panel of the image above, you have a fully functioning mobile phone simulator which enables you to tap the links and see what you'd see if you were looking at the webpage with a mobile phone.

Spelling, Grammar and Readability

Spelling, grammar, and readability are quality signals factored into Google's ranking algorithm.

We already know that spelling and grammar checkers are very basic features of word processing. Considering that mathematical formulas like the Flesch-Kincaid test can analyze a document for readability, there's every reason to believe that Google calculates a readability score based on the average number of syllables used per word and the number of words used per sentence.

Couple all that with the fact that Google's Advanced Search has offered the option to filter search results by reading level, and there's every reason to believe that Google can easily factor spelling, grammar, and readability into their algorithm. It then stands to reason that the higher the readability level, the higher the quality of the content, which leads to higher rankings.
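To get a feel for how a readability score is built from syllables per word and words per sentence, here is a minimal Python sketch of the published Flesch-Kincaid grade-level formula. The syllable counter is a rough heuristic of our own (an assumption, and it will miscount some words), and nothing here reflects how Google actually scores a page.

```python
import re


def count_syllables(word):
    """Rough heuristic: count groups of consecutive vowels, with a minimum of one."""
    lowered = word.lower()
    count = len(re.findall(r"[aeiouy]+", lowered))
    # Treat a trailing "e" (as in "make") as silent.
    if lowered.endswith("e") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text):
    """Flesch-Kincaid grade level: higher values mean harder text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


simple = "The cat sat. The dog ran."
dense = "Considering mathematical readability formulas, algorithmic evaluation becomes conceivable."
print(round(flesch_kincaid_grade(simple), 2))
print(round(flesch_kincaid_grade(dense), 2))
```

Short sentences made of short words score low; long sentences packed with multi-syllable words score high.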

Page Freshness as a Ranking Factor

Earlier we mentioned Query Deserves Freshness (QDF) search results and how that relates to hot topics. Since the search engines love new content, it only stands to reason that newer (i.e., fresh) content will have a ranking advantage over older "stale" content.

Therefore it's always to your advantage to update your best pages as often as is practical based on the type of content you're presenting. The more up-to-date your webpages, the better you can expect them to rank. Conversely, you should do everything in your power to avoid having stale, out-of-date content on your site because that will hurt your rankings.

Pay very close attention to dates. Copyright, articles, reviews, and product pages that reference dates can be a problem if they indicate anything other than the current year or recent months. Your credibility and your rankings will suffer if you're touting the best widget for 2021 if we're already in 2022. You get the idea.

Geolocation Signals

In most cases, especially for businesses that attract customers locally, it's important to include geolocation signals like your address and phone in addition to your company name. This is typically referred to as your NAP (name, address, phone).

Your NAP should be displayed in several locations on your site.

Be sure to make it consistent!

The search engines do NOT like multiple phone numbers or locations. It's confusing to their database and your rankings can suffer. If a location keyword is an element of your customers' searches, you should also include it in your title tags. And, if your geographic location has a nickname or slang term, be sure to work that into your content as well.

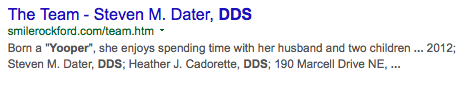

For instance, if you're a dentist in the upper peninsula of Michigan, then you know the term Yooper refers to residents of the local region. As such, a search for Yooper dentist produces the following top result:

Notice how they've worked the slang term for the geographic location neatly into their content.
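Returning to NAP consistency: one simple way to spot conflicting phone numbers is to strip the formatting and compare what remains. The sketch below assumes you have already collected the phone number shown on each page; the page paths, the numbers, and the function names `normalize_phone` and `distinct_phones` are all hypothetical.

```python
import re


def normalize_phone(raw):
    """Reduce a phone number to its digits so formatting differences are ignored."""
    return re.sub(r"\D", "", raw)


def distinct_phones(listings):
    """Map each normalized phone number to the pages that display it."""
    seen = {}
    for page, phone in listings.items():
        seen.setdefault(normalize_phone(phone), []).append(page)
    return seen


# Hypothetical pages and numbers, for illustration only.
listings = {
    "/": "(906) 555-0100",
    "/contact.html": "906-555-0100",
    "/about.html": "906.555.0199",
}
phones = distinct_phones(listings)
if len(phones) > 1:
    print("Inconsistent phone numbers:", phones)
```

Here the first two pages agree once formatting is removed, while the third displays a different number and would be worth correcting.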

Spider Friendly Website Architecture

Your site's layout, aka its architecture, is important. While it's obvious you should make it easy for site visitors to navigate, it's critical that you make it easy for search engine spiders to find all of your pages as they crawl and index your site.

Spiders/bots/crawlers can find, follow, and interpret text links best. These are links with normal anchor text. You should avoid JavaScript and Flash menus when possible. Although search engines have made great strides in finding links in JavaScript, it can still be problematic.

If you're using a JavaScript Menu, you should test it by turning off JavaScript in your browser. If you can't see the links, then neither can the spiders.

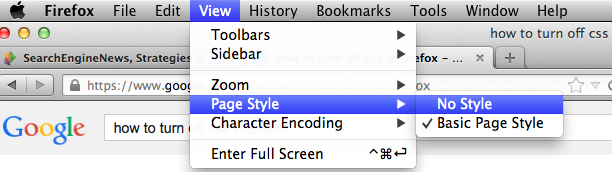

CSS menus can often achieve the design goals that you're looking for without sacrificing crawlability. Drop down links, created using CSS, are easily found, followed and interpreted. If ever you're in doubt about this, simply disable CSS in your browser and take a look at the page. Below you see what the top of this book looks like with and without CSS turned on.

The image on the left conceals the links under the menu button on the navigation bar. The image on the right, with the CSS turned off, shows the links as a search engine spider would see them: as plain text links.

To disable CSS in Firefox, open the View menu, choose Page Style, and select No Style.

Go here to learn how to disable CSS in Chrome

Once you've disabled CSS, you should see the links as normal looking anchor text links when the page is viewed. If you can see them, then so can the search engine spiders and you're good to go.

Image-links are also easily found and followed. However, spiders can't always tell what the image-link is about unless there are clues like keyword.jpg type file names and a description added to the Alt image attribute.

You should always avoid using Flash and Flash movies for mobile compatible sites unless you're ONLY serving that content to compatible devices. And know that Google may penalize your site if you're attempting to use Flash for devices that don't accept it.

Static Links vs. Dynamic links

A static link looks like this:

http://www.yoursite.com/directory/file.html

Of course the webpage in that link is: file.html. Static links offer many advantages over dynamic links. For starters, they make more sense to humans and therefore are more likely to get clicked. They help eliminate the problem of duplicate content, which is good because search engines hate duplicate content. Static links display better in print and other media advertising. And static links typically get broken less often than dynamic links because they are shorter and less likely to contain a lot of hyphens.

A dynamic link is generated on the fly by using a database to name the page on request and as needed. The link might look something like this:

http://www.yoursite.com/s/ref=nb_sb_noss/183-1484986-3953124?url=search-alias%3Daps&field-keywords=keyword1%20keyword2

The webpage in that link is: 183-1484986-3953124?url=search-alias%3Daps&field-keywords=keyword1%20keyword2.

As you can see the link is much longer and it contains a lot of hyphens and other characters that are likely to break the link when spread across two lines. It's impossible to remember and difficult to convey over the phone.

It also wouldn't display well in print or any other form of advertising media and it could become duplicate content if the same search generated the same page but with a different assigned "dynamic" serial number. You get the idea.

But the worst disadvantage to dynamic links is when a spider gets caught in a loop. This happens when the spider finds a product link, indexes the dynamic URL, and then finds another link to the same product and indexes a different dynamic URL even though it's the same product page but with a different serial number.

And when this process happens again and again, the spider is said to be caught in a spider loop. This is bad in terms of getting your site properly indexed. Most spiders will leave your site to avoid such loops and therefore avoid indexing the rest of your site. Again, if your site isn't getting properly indexed then your webpages will NOT show up in the search results.

There are times, however, when dynamic links are desirable in terms of integrating product databases with webpage display. But the good news is there are workarounds. Many Content Management Systems (CMS) like WordPress, for example, can be set up to display static looking URLs that are actually dynamic. If your system demands dynamic URLs, then we suggest you look into making them as simple, unique and people friendly as possible.

Your goal should be to design your site architecture so that spiders can find every page on your site by starting at the home page. That does not necessarily mean that your home page must link to all of your pages. It does mean that, by starting at the home page and following links to secondary pages, these secondary pages allow the spiders to eventually find all of your pages.
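The idea of a spider starting at the home page and following links can be modeled as a breadth-first walk over your internal link graph. The following Python sketch assumes you already have a map of which pages link to which; the function name `unreachable_pages` and the sample graph are hypothetical.

```python
from collections import deque


def unreachable_pages(links, home="/"):
    """Return pages that a crawler following links from `home` would never find."""
    seen = {home}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return sorted(set(links) - seen)


# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/products.html", "/about.html"],
    "/products.html": ["/steel-rebar.html"],
    "/steel-rebar.html": ["/"],
    "/about.html": [],
    "/old-promo.html": ["/"],  # no other page links here
}
print(unreachable_pages(links))
```

Any page the function reports is an orphan: it exists on the site, but no chain of links from the home page leads to it.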

XML sitemaps

XML (Extensible Markup Language) is a type of markup language where tags are created to share information. An XML Sitemap tells the search engines what content you want indexed.

Theoretically the search engines should find all of your content by following links. But an XML sitemap can help speed up the process and reduce the chance of spiders missing some content that isn't easily indexed. This is especially true for getting content like images, videos, and product pages indexed.

Most experts agree that an XML Sitemap is essential for keeping the search engines up-to-date with your website changes. It helps to ensure that all of your important content is indexed and provides supplemental information (metadata) about your content.

By the way, you should not confuse a navigation site map with an XML Sitemap. The former is simply a page of links for your site visitors to use while navigating your site. The latter is a list-feed intended just for search engines and is not at all seen or used by site visitors.

Although XML sitemaps are not technically required, they are highly recommended. They provide useful metadata for the search engines and they're especially useful for content other than webpages. They're fairly easy to generate and there are plug-ins available for WordPress and other CMS platforms to help you do this.
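For readers who would rather generate a sitemap by hand than use a plug-in, here is a minimal Python sketch using the standard `xml.etree.ElementTree` module. It emits only the `loc` and `lastmod` elements of the sitemaps.org format; the function name `build_sitemap`, the example.com URLs, and the dates are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build a sitemap string from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical URLs and dates, for illustration only.
sitemap = build_sitemap([
    ("https://www.example.com/", "2024-04-01"),
    ("https://www.example.com/steel-rebar.html", "2024-03-15"),
])
print(sitemap)
```

The resulting text would typically be saved as sitemap.xml at the root of the site.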

Suggested Reading: This 2019 article-tutorial provides step-by-step directions that are still the same today. To learn how to create and feed your XML sitemaps, take a look at:

Robots.txt

One of the earliest names given to search engine spiders, crawlers, and bots was robots. Thus, the function of a robots.txt file is to tell spiders what to do in regards to crawling and indexing pages on your site.

You might picture your robots.txt file as the tour guide to your site for the search engines. It provides a map that tells search engines where to find the content you want indexed. It also tells them to skip the content you don't want indexed — like duplicate content or resource pages you're providing to site visitors in exchange for their email address.

By telling the search engine bots what to index, and what not to index, you'll get a faster and more efficient indexing of your site.

If you do not have a robots.txt file, then the spiders will index everything. But regardless of whether this is what you want, we recommend that you have a robots.txt file anyway because the search engine spiders are looking for it.
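To see how a well-behaved spider might read such a file, you can experiment with Python's standard `urllib.robotparser` module. The robots.txt contents and example.com URLs below are hypothetical, and `site_maps()` requires Python 3.8 or later. Strictly speaking the file governs which URLs a spider may crawl, so the sketch checks crawl permission.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /downloads/
Disallow: /print/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A normal product page is allowed; the gated download area is not.
print(parser.can_fetch("*", "https://www.example.com/steel-rebar.html"))
print(parser.can_fetch("*", "https://www.example.com/downloads/guide.pdf"))
# The Sitemap line tells spiders where to find the XML sitemap.
print(parser.site_maps())
```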

There is a lot you can do with a robots.txt file to improve the efficiency of getting your site indexed. We highly recommend that you study and bookmark the following tutorial so that when the time comes to implement the various functions of robots.txt you'll be able to easily create the perfect file that will give you the results you're looking for.

URL Redirection

Redirects, sometimes referred to as URL forwarding, make a webpage available under more than one URL address. When attempting to visit a URL that's been redirected, a page with a different URL opens up. For example, www.yourolddomain.com is redirected to www.yournewdomain.com.

Redirects can be used to forward incoming links to a correct new location whenever they're pointed to an outdated URL. Such links might be coming from external sites that are unaware of the URL change. They may also be coming from bookmarks that users have saved in their browsers. Sometimes they're used to tell search engines that a page has permanently moved.

There are two kinds of redirects that you need to know about.

- Browser based redirects

- Server side redirects

Browser based redirects have fallen out of favor with the search engines due to their frequent use in manipulating the search rankings. For that reason, they can often do more harm than good. That's why we recommend that, if you use them, you'd better know what you're doing. Otherwise, you should avoid browser based redirects if your site is dependent on good rankings.

Server side redirects are safer and necessary to use in specific instances, like when a URL has moved. The two most common redirects are the 301 redirect and the 302 redirect.

Both of these are highly useful. We recommend that you study the tutorial below in order to gain a full working knowledge of how these valuable web developer tools can be safely applied. Consider it essential reading.

Duplicate Content

As previously mentioned, search engines hate duplicate content. Their thinking is that it wastes their resources and provides a bad user experience. That's why they tend to filter out and sometimes penalize sites that clog their index with duplicate content.

The biggest offenders are product pages that all carry the same product description. Google doesn't care where you buy the product. They only care that you aren't served the same product page coming from multiple websites.

So they look for the content originator and tend to favor the name-brand company that produces the product. Or, they prominently rank a well-known large site like Amazon.com. The rest of the pages selling the same item tend to get filtered out of the rankings.

We also mentioned how duplicate content issues might arise whenever a site uses a dynamic database system to create product pages on the fly. This should be avoided as well.

And, of course, any other content that duplicates what is already on another site should also be avoided. The bottom line is that Google is looking for original content. Anything that isn't original reflects badly on the overall site quality. So, you should see to it that your site contains only original content and not something that can be found elsewhere.

Canonical URL

In the parlance of SEO, Canonical is a hard-to-pronounce fancy word which simply means preferred. Your Canonical URL is your preferred URL.

You might be surprised to learn that, even though both of these URLs...

- domain.com

- www.domain.com

...serve visitors your home page's content, Google sees them as two different URLs. That's why you must choose your preferred URL — your canonical URL.

As you can see, the first one does not include the www, the second one does. Each of them is a variation of your home page but Google needs to know which one you prefer. And unless you tell Google which one is the canonical, they'll guess at it — and they might not guess right.

If you neglect to choose a canonical, and other sites point their links to both versions, your PageRank will be diluted because it's divided instead of combined. This will hurt your rankings.

Furthermore, Google will see two different URLs with the same content. This creates a potential duplicate content problem. They won't know which URL you want indexed — and this puts your site at a disadvantage in the rankings.

The solution is to log into your Google Search Console account and choose your Canonical URL — it doesn't matter which one you choose. Google doesn't care whether you use the www or not, but you must be consistent.

Then you simply redirect the traffic from the one you're not using to the one you've chosen to use. In addition, you must do your best to encourage sites to point their links at your canonical URL. That way you maximize your link juice (i.e., PageRank).
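The redirect logic itself is simple. The Python sketch below shows the decision a server would make, assuming www.domain.com was chosen as the canonical host: requests for the non-preferred host are answered with a 301 (moved permanently) pointing at the same path on the canonical host. The function name `canonical_redirect` and the `aliases` parameter are hypothetical, and in practice this rule usually lives in your web server or CMS configuration.

```python
from http import HTTPStatus
from urllib.parse import urlsplit, urlunsplit

CANONICAL_HOST = "www.domain.com"  # assumption: the www version was chosen


def canonical_redirect(url, canonical_host=CANONICAL_HOST, aliases=("domain.com",)):
    """Return (status, location) for a non-canonical URL, or None if no redirect is needed."""
    parts = urlsplit(url)
    if parts.netloc.lower() in aliases:
        # Keep the scheme, path, and query string; swap only the host name.
        target = urlunsplit(parts._replace(netloc=canonical_host))
        return int(HTTPStatus.MOVED_PERMANENTLY), target
    return None


print(canonical_redirect("http://domain.com/steel-rebar.html?ref=1"))
print(canonical_redirect("http://www.domain.com/steel-rebar.html"))
```

A 301 is used because the move is permanent; a 302 would signal a temporary redirect.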